How to use multi-instance learning

Have you come across multi-instance learning (MIL) in medical algorithm development? MIL is a form of supervised learning in which each training example, known as a “bag”, contains multiple instances but carries only one label. Unlike traditional supervised learning, which relies on a label for every individual instance, MIL makes predictions at the bag level. This makes it well suited to weakly annotated data, especially in fields where precise instance-level labels are hard to obtain. New MIL algorithms continue to be developed to handle this label uncertainty, which makes MIL a valuable tool in machine learning and AI.
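As a minimal sketch of the bag-level setup, the Python below represents a bag as a list of instance feature vectors with one label and derives a bag prediction from per-instance scores. The `Bag` class, the `toy_score` function, and the max-pooling rule (a bag is positive if at least one instance scores above a threshold) are illustrative assumptions, not a prescribed implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Bag:
    """One training example: many instances, a single bag-level label."""
    instances: List[Sequence[float]]  # one feature vector per instance
    label: int                        # 1 = positive bag, 0 = negative bag


def predict_bag(bag: Bag,
                score_instance: Callable[[Sequence[float]], float],
                threshold: float = 0.5) -> int:
    """Max-pooling rule (an assumption): the bag is positive if its
    highest-scoring instance exceeds the threshold."""
    best = max(score_instance(x) for x in bag.instances)
    return int(best > threshold)


def toy_score(x: Sequence[float]) -> float:
    """Hypothetical instance scorer: simply reads the first feature."""
    return x[0]


bag = Bag(instances=[[0.1, 0.3], [0.9, 0.2], [0.2, 0.8]], label=1)
prediction = predict_bag(bag, toy_score)
```

Note that the label is stored once per bag. No instance carries its own label, which is the defining property of the MIL setting.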

The history of multi-instance learning

MIL was first conceptualized in the early 1990s by James D. Keeler, David E. Rumelhart, and Wee-Kheng Leow, in a study on recognizing handwritten signs and digits. This foundational work, published in the Neural Information Processing Systems proceedings [1], introduced the concept of learning from ambiguous labels. Each “bag” contained multiple examples of handwritten digits, and the model was trained to predict whether at least one instance in the bag matched a target digit. The motivation was that traditional segmentation methods struggle with touching, broken, or noisy characters. Self-organizing learning offers a solution: it uses position-independent information to group similar elements automatically, and, as the handwritten-digit experiments showed, it works even for overlapping characters.

How does medical imaging benefit from MIL?

A critical application of MIL is medical imaging. In colonoscopy procedures [2], for example, a single colon section may generate thousands of frames, yet the ground truth (GT) is available only at the section level, not for individual frames. Because MIL can learn from section-level labels, medical professionals can label entire sections rather than each frame, which saves time and reduces the complexity of data annotation.
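The section-level labeling described above can be sketched as a simple grouping step: frames are collected per section, and each group inherits the section's single label. The identifiers (`section_A`, `frame_0001`), the input layout, and the `build_bags` helper are hypothetical and only illustrate the idea.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical inputs: (section_id, frame_id) pairs and one label per section.
frames: List[Tuple[str, str]] = [
    ("section_A", "frame_0001"),
    ("section_A", "frame_0002"),
    ("section_B", "frame_0001"),
    ("section_A", "frame_0003"),
    ("section_B", "frame_0002"),
]
section_labels: Dict[str, int] = {"section_A": 1, "section_B": 0}


def build_bags(frames: List[Tuple[str, str]],
               section_labels: Dict[str, int]) -> Dict[str, Tuple[List[str], int]]:
    """Group frame ids by section and attach the section-level label."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for section_id, frame_id in frames:
        grouped[section_id].append(frame_id)
    # Only sections with a known label become training bags.
    return {
        section_id: (frame_ids, section_labels[section_id])
        for section_id, frame_ids in grouped.items()
        if section_id in section_labels
    }


bags = build_bags(frames, section_labels)
```

Each entry in `bags` pairs a list of frames with one label, so the annotator only ever supplies one value per section.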

MIL limitations

Despite its advantages, MIL has limitations when integrated with deep learning models:

- Black Box Inference: When a model classifies a bag of frames, it is hard to see which frames influenced the final decision. In other words, the decision-making process becomes a black box.

- Information Blending: The algorithm tends to merge information from all instances within a bag, which can obscure the influence of significant but less frequent features.
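A small numeric illustration of the blending effect, using made-up scores: when a bag of 1000 frames contains a single suspicious frame, averaging over the bag almost erases it, while taking the maximum keeps it. The scores are hypothetical, and the two pooling rules are chosen only to show the contrast, not as a recommendation.

```python
# Hypothetical per-frame scores for one bag: 1 suspicious frame among 1000.
scores = [0.02] * 999 + [0.95]

# Mean pooling blends every frame into one number, so the rare
# high-scoring frame barely moves the result.
mean_pooled = sum(scores) / len(scores)

# Max pooling keeps only the strongest single frame.
max_pooled = max(scores)

print(f"mean pooling: {mean_pooled:.4f}")
print(f"max pooling:  {max_pooled:.4f}")
```

Neither rule reveals on its own which frame mattered and why, which is the black-box problem listed above.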

Multi-instance learning – recent advancements

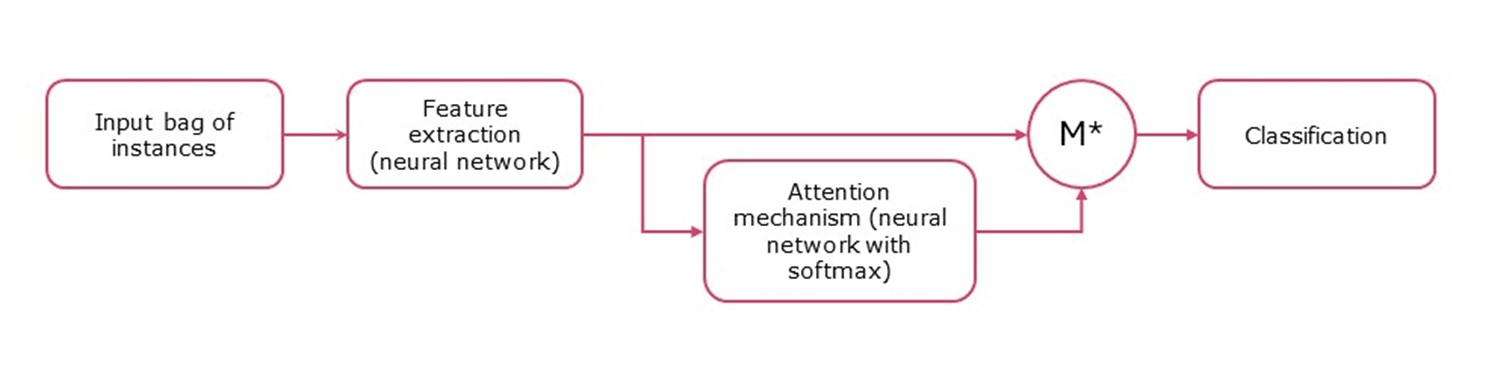

To address these issues, recent work has integrated attention mechanisms into MIL, as explored in the 2018 study by Ilse, Tomczak, and Welling, “Attention-based Deep Multiple Instance Learning” (Fig.1). These mechanisms help the model focus on the relevant instances within a bag and improve transparency by highlighting the instances that influenced the prediction. However, if the attention weights are too similar across instances, interpretability remains a challenge, because every frame appears to influence the final classification equally.

Fig.1 Attention-based MIL algorithm proposed by Ilse, Tomczak, and Welling (2018). Please note that the M* block is a weighted feature aggregation mechanism, often based on a simple multiplication of attention weights and features within a single bag of instances.
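The weighted aggregation step can be sketched in plain Python. Each instance embedding receives a score `w · tanh(V h)`, the scores are normalized with a softmax over the bag, and the bag representation is the attention-weighted sum of the embeddings. The function name `attention_pool` and the hand-picked values of `V` and `w` are assumptions for illustration. In a real model these parameters are learned together with the rest of the network.

```python
import math
from typing import List, Tuple


def attention_pool(H: List[List[float]],
                   V: List[List[float]],
                   w: List[float]) -> Tuple[List[float], List[float]]:
    """H: K instance embeddings of length M; V: L x M matrix; w: length L.
    Returns (attention weights over the K instances, pooled bag vector)."""
    logits = []
    for h in H:
        # Hidden projection tanh(V h), then a scalar score w . tanh(V h).
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h))) for row in V]
        logits.append(sum(wi * ti for wi, ti in zip(w, hidden)))

    # Softmax over the instances in the bag (shifted by max for stability).
    shift = max(logits)
    exps = [math.exp(score - shift) for score in logits]
    total = sum(exps)
    weights = [e / total for e in exps]

    # Bag representation: attention-weighted sum of instance embeddings.
    dim = len(H[0])
    pooled = [sum(a * h[d] for a, h in zip(weights, H)) for d in range(dim)]
    return weights, pooled


# Toy bag of three 2-D embeddings with hand-picked (not learned) parameters.
H = [[0.1, 0.0], [2.0, 1.0], [0.0, 0.2]]
V = [[1.0, 0.0], [0.0, 1.0]]
w = [1.0, 1.0]
weights, pooled = attention_pool(H, V, w)
```

The `weights` list is what makes the approach more inspectable than plain pooling: it holds one number per instance and can be reported alongside the bag prediction.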

Multi-instance learning and its future in medical imaging

Several enhancements could make MIL more useful in medical imaging:

- Improving Attention Weight Discrimination: Techniques that make attention weights more distinct could help identify key instances within a bag more clearly. This might involve advanced training strategies or integrating multiple attention mechanisms (e.g. gated attention).

- Hybrid Models: Combining attention-based MIL with other interpretative machine learning frameworks could yield richer insights, capturing complexities within a bag more effectively.

- Transparent Reporting and Visualization: Tools that visualize attention weights alongside instances can provide direct feedback on what the model focuses on, increasing trust, especially in clinical settings.

- Utilizing Post-hoc Analysis: Methods like LIME or SHAP might be applied post-training to explain predictions, although their effectiveness can be limited if the model uses complex embeddings rather than straightforward features.
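One simple way to check how discriminative a set of attention weights is, and to report the most influential instances, is sketched below. Normalized entropy as a diagnostic and the helper names `normalized_entropy` and `top_instances` are assumptions made for illustration, not an established part of any MIL method discussed here.

```python
import math
from typing import List


def normalized_entropy(weights: List[float]) -> float:
    """Return a value in [0, 1]: 0 means all attention sits on one
    instance, 1 means the weights are perfectly uniform."""
    k = len(weights)
    if k < 2:
        return 0.0
    entropy = -sum(a * math.log(a) for a in weights if a > 0)
    return entropy / math.log(k)


def top_instances(weights: List[float], k: int = 3) -> List[int]:
    """Indices of the k instances with the largest attention weights."""
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return order[:k]


uniform = [0.25, 0.25, 0.25, 0.25]   # every frame looks equally important
peaked = [0.97, 0.01, 0.01, 0.01]    # one frame clearly dominates

uniform_score = normalized_entropy(uniform)
peaked_score = normalized_entropy(peaked)
key_frames = top_instances(peaked, k=2)
```

A score close to 1 flags bags where the attention weights say little about which instances mattered, while the ranked indices could feed a visualization that shows the top frames next to the prediction.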

Conclusions

Integrating attention mechanisms into multi-instance learning is a significant advance in learning from bags that carry a single label, which is crucial in complex fields like medical imaging. Continued research and development are vital for refining these models to improve their precision, usability, and transparency in medical diagnostics and treatment planning.

References:

[1] https://proceedings.neurips.cc/paper/1990/file/e46de7e1bcaaced9a54f1e9d0d2f800d-Paper.pdf

[2] https://graylight-imaging.com/medical-video-data-on-cloud-infrastructures/