Semi-automatic segmentation

By Marek Pitura

In the context of medical image analysis, you may encounter the term ‘semi-automatic segmentation’. Although automatic segmentation seems relatively intuitive, the ‘semi-automatic’ qualifier can cause some confusion when applied to artificial intelligence algorithms. Therefore, we would like to briefly explain what this semi-automatic property consists of and present examples of its use.

What is semi-automatic segmentation?

First, let us define segmentation, as this will be the task of our algorithm. Segmentation is the outlining of areas of interest in medical images. Such an area may be a specific tissue, organ or tumour.

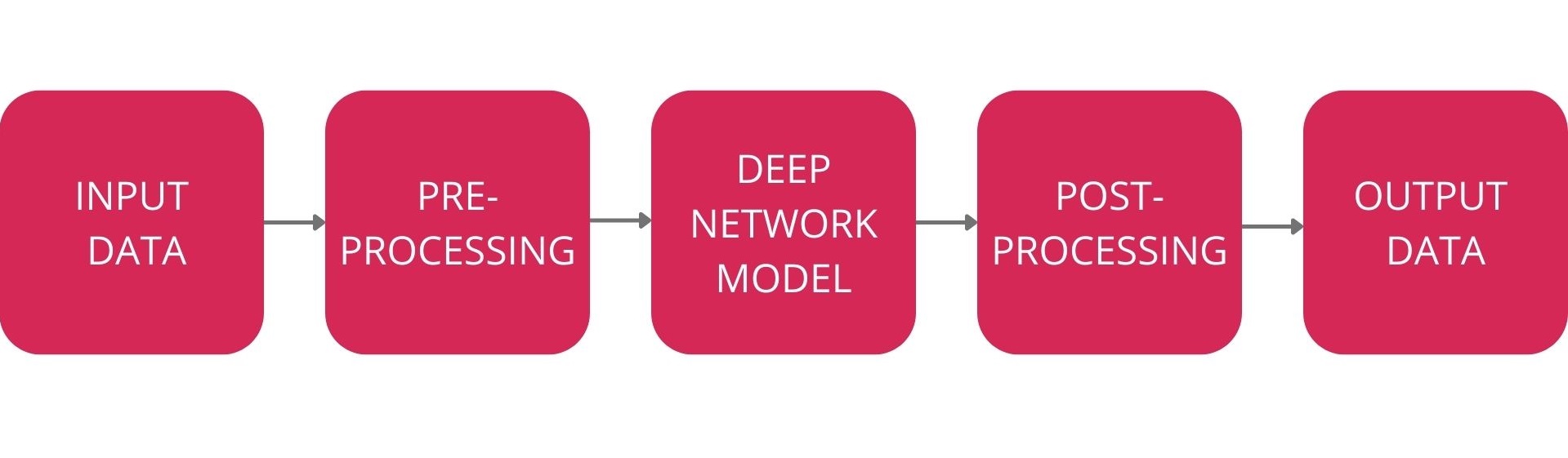

Next, to understand the essence of semi-automatic segmentation, we need to look at the algorithm used to outline the areas of interest. For methods based on deep learning, it usually consists, to put it simply, of the following steps:

Initially, the algorithm receives image files, e.g. in DICOM format [1]. The raw data is pre-processed and standardised, and then a pre-trained deep neural network (DNN) model is run. Its results may then be processed further to generate the final outlines. If the entire process takes place with no human intervention, this is automatic segmentation. However, if any user interaction is required during the process, this is semi-automatic segmentation. Such interaction may take place, for example, during pre- or post-processing.
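The steps described above can be sketched in a few lines of Python. This is only a minimal illustration and not our production code: the function names (`preprocess`, `placeholder_model`, `postprocess`, `segment`) and the optional `review` callback are hypothetical, and a simple sigmoid stands in for the pre-trained DNN. The only difference between the automatic and the semi-automatic variant is whether a user-supplied step is plugged into the pipeline.

```python
from typing import Callable, Optional

import numpy as np

Array = np.ndarray


def preprocess(image: Array) -> Array:
    """Standardise raw intensities to zero mean and unit variance."""
    image = image.astype(np.float32)
    std = image.std()
    return (image - image.mean()) / (std if std > 0 else 1.0)


def placeholder_model(image: Array) -> Array:
    """Hypothetical stand-in for a pre-trained DNN: per-pixel probabilities."""
    return 1.0 / (1.0 + np.exp(-image))  # sigmoid of the standardised image


def postprocess(probabilities: Array, threshold: float = 0.5) -> Array:
    """Turn the probability map into a binary outline mask."""
    return probabilities >= threshold


def segment(
    image: Array,
    model: Callable[[Array], Array] = placeholder_model,
    review: Optional[Callable[[Array, Array], Array]] = None,
) -> Array:
    """Run the pipeline; it is semi-automatic only if `review` is given."""
    mask = postprocess(model(preprocess(image)))
    if review is not None:
        # User interaction: a person inspects and possibly corrects the mask.
        mask = review(image, mask)
    return mask
```

Calling `segment(image)` corresponds to the fully automatic case, while `segment(image, review=my_editor)` (with `my_editor` being whatever tool shows the mask to the user and returns the corrected version) corresponds to the semi-automatic one.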

Pre-processing: required steps

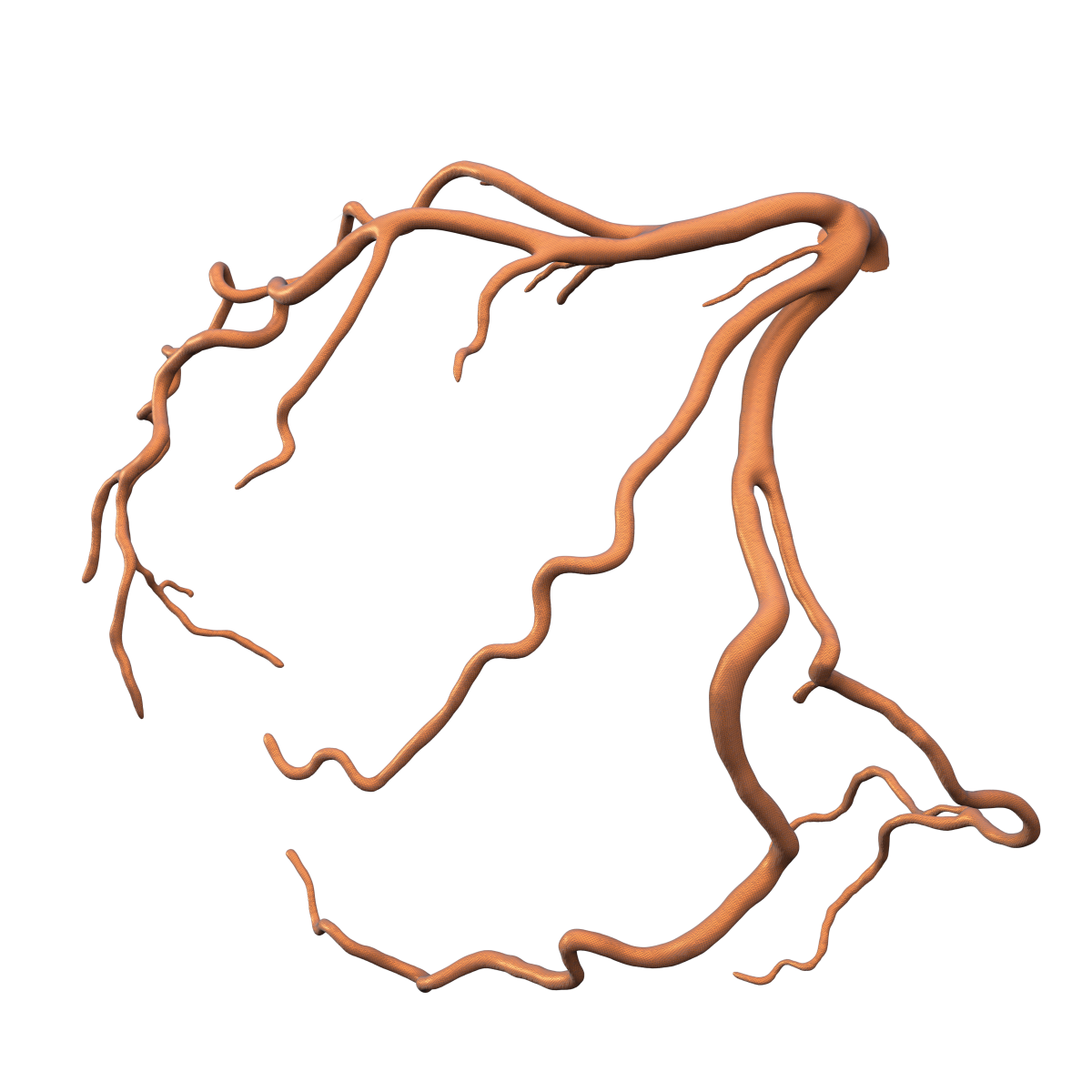

An example of a semi-automatic segmentation algorithm with user interaction during pre-processing is our algorithm for coronary vessel segmentation in angio-CT examinations. Its input consists of DICOM files together with vessel centerlines prepared manually by the user, i.e. a diagram of the vessel route.

Obviously, preparing such centerlines takes up the user’s time, but it also gives the deep network model more information and makes it possible to obtain a higher-quality vessel segmentation. Quality improves here at the expense of the user’s time. This approach is adopted, for example, for images of inferior quality, where the user’s expertise and intuition about the anatomy and the vessel route support the model based on artificial intelligence.
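One common way to hand such a centerline to a network is to rasterise it into a binary volume and stack it with the image as an extra input channel. The sketch below assumes exactly that; it is an illustration of the idea, not a description of how our model actually consumes the centerline. The function names and the `(z, y, x)` voxel-index convention are assumptions made for this example.

```python
import numpy as np


def centerline_to_mask(points, shape):
    """Rasterise user-drawn centerline points into a binary volume.

    `points` is a sequence of (z, y, x) voxel indices (assumed convention).
    Points that fall outside the volume are ignored.
    """
    mask = np.zeros(shape, dtype=np.float32)
    idx = np.asarray(points, dtype=int).reshape(-1, 3)
    inside = np.all((idx >= 0) & (idx < np.array(shape)), axis=1)
    idx = idx[inside]
    mask[idx[:, 0], idx[:, 1], idx[:, 2]] = 1.0
    return mask


def build_model_input(volume, points):
    """Stack the CT volume and the centerline guide as two channels."""
    guide = centerline_to_mask(points, volume.shape)
    return np.stack([volume.astype(np.float32), guide], axis=0)
```

The result has shape `(2, depth, height, width)`: channel 0 holds the image and channel 1 the user's guidance, so the model sees both at every voxel.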

Post-processing: required steps

Examples of semi-automatic segmentation algorithms in which user interaction takes place during post-processing include all cases where the results need verification or manual adjustment, e.g. to improve their precision. The manually “improved” segmentation is then used by subsequent algorithms or for further calculations.

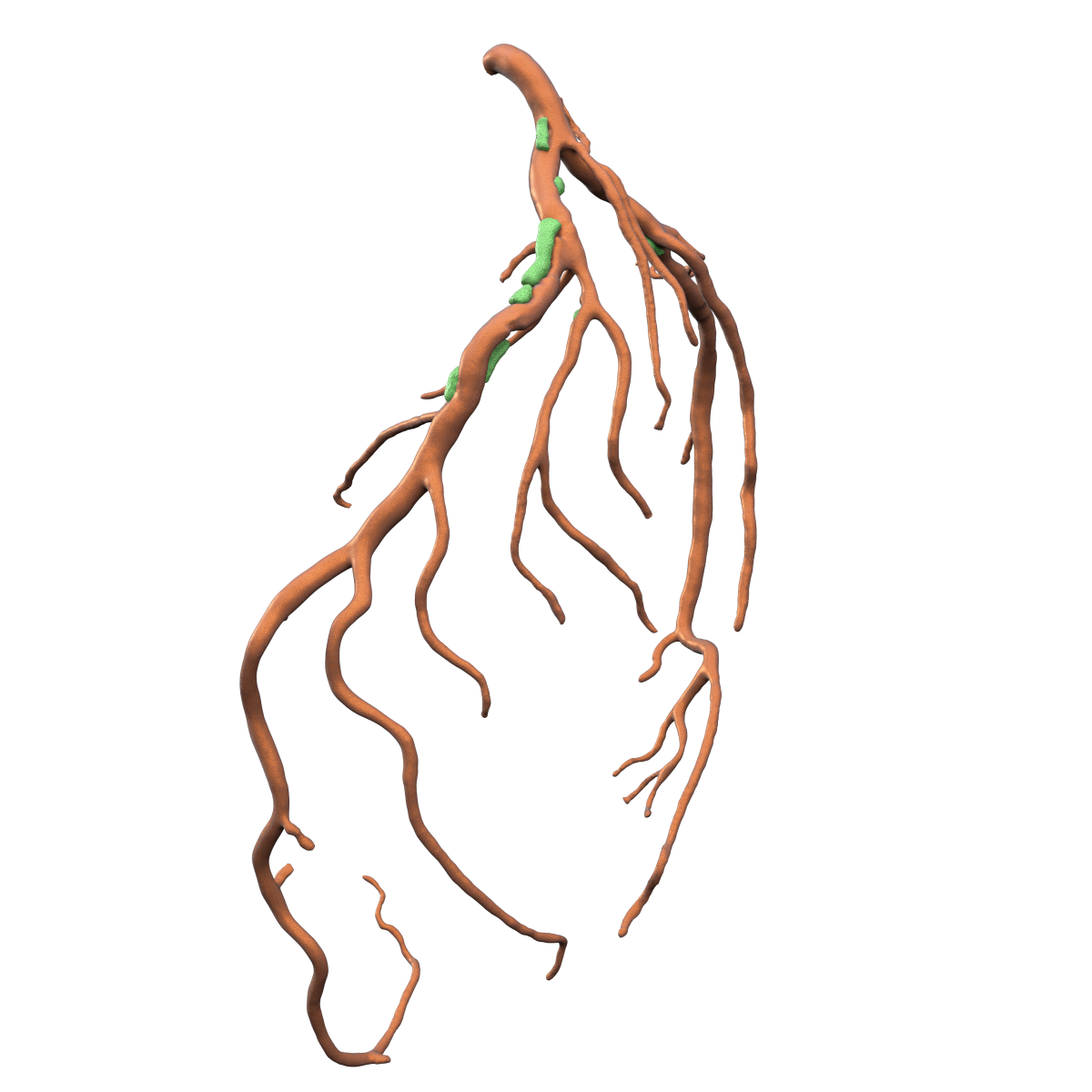

Good examples here are the algorithms for the segmentation of coronary vessels and plaque buildup developed by Graylight Imaging’s team.

Coronary artery disease (CAD) is a leading cause of death globally. Atherosclerosis, the buildup of plaque within artery walls, plays a major role in this condition. By narrowing the passage for blood flow, plaque significantly affects heart health.

The algorithm’s objective is to measure the vessel lumen at the atheroma site. What we require here is the vessel itself and the atheroma obstructing it. Dedicated deep learning models trained on medical images segment both components. The user then sees the results and can either confirm them or make manual adjustments using a specialised tool. Only after this approval does the solution calculate the vessel lumen at the indicated site.
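The approval step can be thought of as a gate in front of the measurement. The sketch below is a simplified illustration with several assumptions of our own: it works on a single cross-sectional slice, treats the lumen as the vessel mask minus the plaque mask, and uses a hypothetical function `lumen_area_mm2` with a boolean `approved` flag. The real calculation may differ.

```python
import numpy as np


def lumen_area_mm2(vessel, plaque, pixel_spacing_mm, approved):
    """Lumen area on one cross-section, computed only after user approval.

    `vessel` and `plaque` are boolean masks of the same shape;
    `pixel_spacing_mm` is (row spacing, column spacing) in millimetres.
    """
    if not approved:
        # Semi-automatic step: no measurement without the user's confirmation.
        raise ValueError("segmentation must be approved before measurement")
    lumen = vessel & ~plaque  # assumed: open lumen = vessel minus plaque
    pixel_area = pixel_spacing_mm[0] * pixel_spacing_mm[1]
    return float(lumen.sum()) * pixel_area
```

If the user edits either mask in the correction tool, the same function is simply called again with the adjusted masks and `approved=True`.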

To automate or not to automate? Summary

To sum up this short introduction to semi-automatic segmentation, it is worth stressing its advantages once again. Not only does it help to improve or guarantee the quality of the final segmentations, but it can also reduce the processing time and the load on computing resources compared with an algorithm that operates fully automatically, with no human support. Support is the key word here. By drawing on human involvement, semi-automatic segmentation algorithms can deliver the required solutions where complete automation is not possible or does not produce the required results.

References: