AI in Healthcare – news picked by GLI /02

Introducing the second edition of “AI in Healthcare”, a collection of posts exploring the latest developments and emerging trends in medical AI. Through this series, we aim to present a thoughtfully selected blend of news and perspectives, carefully chosen by the Graylight Imaging team.

AI in healthcare guidance? The lung cancer case

For many years, medical software development has driven advances in medical technology. The NICE diagnostics guidance “AI-derived computer-aided detection (CAD) software for detecting and measuring lung nodules in CT scan images” [1], published on 5 July, states that AI-derived CAD software can be used to help clinicians find and measure lung nodules in CT scans as part of targeted lung cancer screening. Such software can automatically identify and measure lung nodules on CT images, helping healthcare professionals decide on further investigations or surveillance. (If that sounds interesting, take a look at one of our case studies.)

Does this mean AI in healthcare is starting a new chapter? Not necessarily. The guidance advises against using AI-derived CAD software for people having a chest CT scan because of symptoms suggesting lung cancer, or for reasons unrelated to suspected lung cancer. The reason for this caution is that there is not enough evidence to recommend the software in these cases: it may flag non-cancerous nodules and cause patients unnecessary anxiety.

Patients’ concerns

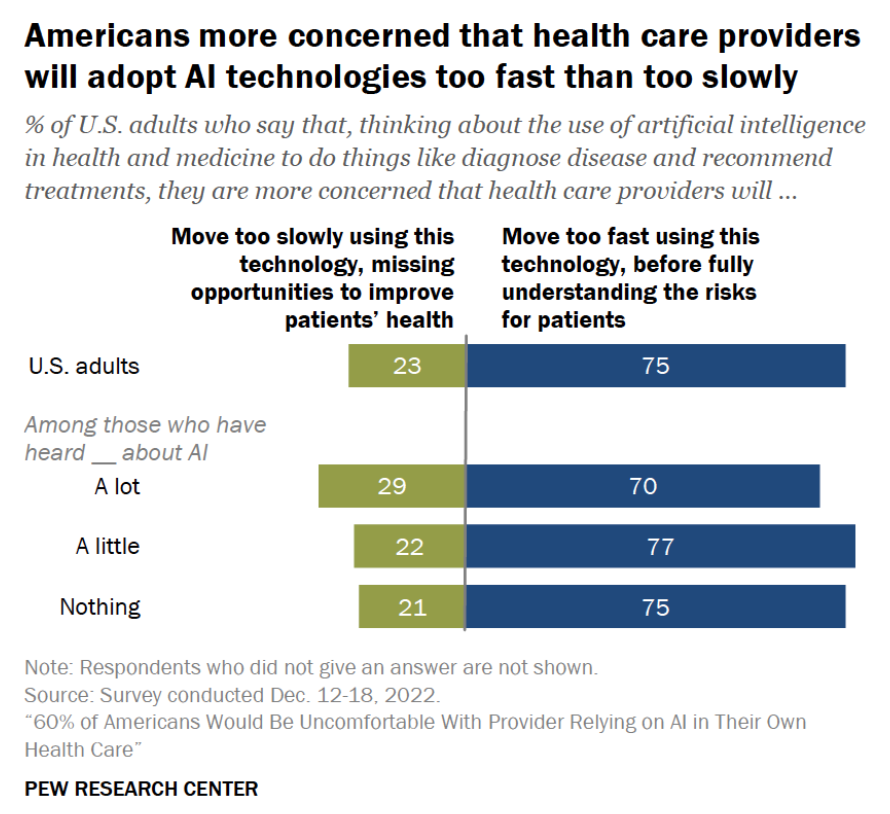

The lack of definitive evidence is also reflected in public sentiment. According to a February 2023 survey [2], a notable share of Americans are uncomfortable with the use of medical AI. Six in ten U.S. adults say they would feel uneasy if their healthcare provider relied on artificial intelligence for tasks such as diagnosing diseases and recommending treatments, while a smaller proportion (39%) say they would feel comfortable with it.

However, this anxiety may also stem from another factor: legal regulation, which is especially visible in discussions about ChatGPT. Large language models represent a new frontier in healthcare, and we need an entirely new regulatory framework to ensure their safety for medical use. The Medical Futurist discusses these unprecedented challenges and practical expectations in one of its recent posts [3].

Medical AI and cloud technology?

Alongside these discussions of AI in healthcare, computing power also deserves a mention. A recent Signify report shows that the use of radiology IT on the public cloud is currently limited mostly to the academic and outpatient sectors [4]. Widespread adoption of the public cloud has not yet reached a tipping point, primarily because several significant barriers remain. The report outlines the main challenges the market faces, such as patient data policies and storage costs, but, more importantly, a lack of real-world use cases of public cloud adoption. It also examines how technology vendors can help address the obstacles to cloud adoption in radiology, and how collaboration among these vendors could increase cloud use in medical imaging as a whole.

Stay tuned for our upcoming posts and prepare to be inspired by the possibilities of medical AI.

References:

[1] https://www.nice.org.uk/guidance/dg55

[3] https://medicalfuturist.com/why-and-how-to-regulate-chatgpt-like-large-language-models-in-healthcare