Domain Adaptation

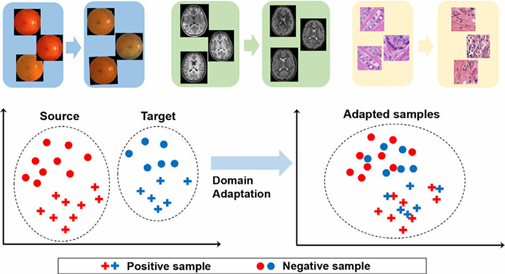

Medical imaging AI models often face challenges when applied to data from different hospitals, devices, or patient populations. Domain adaptation in medical imaging offers solutions to improve model robustness and generalizability.

What is Domain Adaptation in Medical Imaging?

Domain adaptation means teaching an AI model trained on one dataset to perform well on another dataset that looks different. It is about reducing the gap between the ‘source domain’ (training data) and the ‘target domain’ (new data), so that the model can handle differences like scanner type, imaging protocol, or patient population.

Why it matters more in medical imaging than in natural image processing:

Medical imaging data is far less standardized than natural images. Scanners, acquisition protocols, and patient demographics introduce variability that is much stronger than differences between everyday photographs. Without domain adaptation, AI models trained on one hospital’s images may not generalize to another, making it a more critical challenge than in typical computer vision tasks.

Techniques for Domain Adaptation

| Category | Approach | How it Works | Example |
|---|---|---|---|
| Data-level | Normalization & augmentation | Adjust images or add variation to mimic new domains | MRI intensity normalization; noisy low-dose CT |
| Data-level | Synthetic data / style transfer | Generate or restyle data to match the target | CycleGAN for stain adaptation |
| Feature-level | Domain-invariant features | Learn representations common across domains | Lung nodule shape independent of the scanner |
| Feature-level | Adversarial training (DANN) | Fool a domain classifier to align feature spaces | MRI features aligned across vendors |
| Model-level | Transfer & self-supervision | Fine-tune a pretrained model, or pretrain on unlabeled data with self-supervision | Pretrained MRI model adapted to a new hospital |
| Advanced | Federated / test-time adaptation | Train across sites without pooling their data, or adapt during inference | Federated lung nodule detection across hospitals |
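The two data-level examples in the first table row can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a validated preprocessing pipeline: `zscore_normalize` and `simulate_low_dose` are hypothetical helper names, the optional mask is assumed to be a boolean foreground mask, and the low-dose augmentation is a crude image-domain approximation that assumes noise standard deviation scales with 1/sqrt(dose) and a hypothetical full-dose noise level of 10 HU. More faithful low-dose simulators typically add noise in the projection (sinogram) domain instead.

```python
import numpy as np


def zscore_normalize(volume, mask=None, eps=1e-8):
    """Per-scan z-score intensity normalization (e.g. for MRI).

    Mean and std are computed inside `mask` (an assumed boolean foreground
    mask) when given, so that background air does not dominate them.
    """
    volume = np.asarray(volume, dtype=np.float64)
    voxels = volume[mask] if mask is not None else volume
    return (volume - voxels.mean()) / (voxels.std() + eps)


def simulate_low_dose(ct_hu, dose_fraction, base_noise_hu=10.0, rng=None):
    """Crude image-domain low-dose CT augmentation (simplifying assumption).

    Assumes the full-dose image already carries noise with std `base_noise_hu`
    (hypothetical value) and that noise std scales as 1/sqrt(dose). Independent
    noise variances add, so reaching `dose_fraction` of the dose needs extra
    Gaussian noise with std base_noise_hu * sqrt(1/dose_fraction - 1).
    """
    if not 0.0 < dose_fraction <= 1.0:
        raise ValueError("dose_fraction must be in (0, 1]")
    rng = np.random.default_rng() if rng is None else rng
    ct_hu = np.asarray(ct_hu, dtype=np.float64)
    extra_std = base_noise_hu * np.sqrt(1.0 / dose_fraction - 1.0)
    return ct_hu + rng.normal(0.0, extra_std, size=ct_hu.shape)


# Usage on synthetic arrays (stand-ins for real scans).
rng = np.random.default_rng(0)
mri = rng.normal(300.0, 50.0, size=(32, 32, 8))  # arbitrary scanner intensity scale
mri_norm = zscore_normalize(mri)                  # mean ~0, std ~1 after normalization
water = np.zeros((64, 64))                        # flat 0 HU region
quarter_dose = simulate_low_dose(water, dose_fraction=0.25, rng=rng)
```

Normalizing each scan by its own statistics removes scanner-specific intensity offsets and scales, while the noise augmentation exposes a model trained on full-dose scans to the kind of noise it will meet on low-dose screening CT.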

Benefits of Domain Adaptation for Healthcare

1. Improved diagnostic accuracy across institutions — ensuring consistent results even when scanners or protocols differ.

2. Better generalization to real-world clinical scenarios — models remain reliable in diverse hospital settings.

3. Reduced need for costly re-annotation — less manual labeling required for new datasets.

4. Faster deployment of AI solutions — models can be adapted quickly without complete retraining.

5. Increased trust and adoption by clinicians — consistent performance builds confidence in AI tools.

6. Support for rare or underrepresented cases — domain adaptation allows models to transfer knowledge to smaller or less common datasets.

Practical Applications and Case Studies

Here are some of the most prominent real-world unsupervised domain adaptation applications in medical imaging:

1. Brain lesion segmentation across MRI scanners — Kamnitsas et al. (2017) showed that adversarial networks can adapt models trained on one MRI scanner to another without using target labels.

2. Histopathology stain adaptation — CycleGAN-based methods successfully adapted slides between different staining protocols, improving cancer detection without target annotations.

3. Chest X-ray analysis — Unsupervised methods aligned datasets from different hospitals, reducing the domain gap caused by varied acquisition protocols.

4. Lung nodule detection in CT — Domain adaptation improved performance on low-dose screening CTs when models were trained only on high-dose scans.

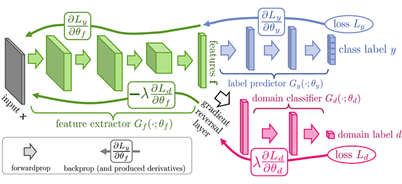

Example Methodology – Domain-Adversarial Neural Networks (DANN)

One of the most prominent domain adaptation methods is the Domain-Adversarial Neural Network (DANN), introduced by Ganin and Lempitsky (2015) and Ganin et al. (2016). DANN is a machine learning technique that helps a model work well on data from different sources (domains). It is especially valuable when the target domain has no labeled data at all, which makes adaptation particularly challenging.

The key idea is simple:

The model has two jobs at once:

- Learn to solve the main task (e.g., detect a tumor in an MRI).

- Hide the “domain identity” of the data (e.g., whether the MRI came from Hospital A or Hospital B).
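The two jobs can be written as a single objective. The formulation below follows Ganin et al. (2016) in slightly simplified notation chosen here: $G_f$ is the feature extractor (parameters $\theta_f$), $G_y$ the label predictor ($\theta_y$), $G_d$ the domain classifier ($\theta_d$), $S$ the labeled source samples, $T$ the unlabeled target samples, $d_i$ the domain label (0 for source, 1 for target), $L_y$ and $L_d$ the task and domain classification losses, and $\lambda > 0$ a trade-off weight.

$$
E(\theta_f,\theta_y,\theta_d) = \frac{1}{|S|}\sum_{(x_i,y_i)\in S} L_y\big(G_y(G_f(x_i;\theta_f);\theta_y),\,y_i\big) \;-\; \lambda\left(\frac{1}{|S|}\sum_{x_i\in S} L_d\big(G_d(G_f(x_i;\theta_f);\theta_d),\,d_i\big) + \frac{1}{|T|}\sum_{x_i\in T} L_d\big(G_d(G_f(x_i;\theta_f);\theta_d),\,d_i\big)\right)
$$

$$
(\hat\theta_f,\hat\theta_y) = \arg\min_{\theta_f,\theta_y} E(\theta_f,\theta_y,\hat\theta_d), \qquad \hat\theta_d = \arg\max_{\theta_d} E(\hat\theta_f,\hat\theta_y,\theta_d)
$$

Maximizing $E$ over $\theta_d$ is the same as minimizing the domain loss, so the domain classifier tries to tell the hospitals apart. The feature extractor minimizes the task loss while pushing the domain loss up, which is exactly the second job: hiding the domain identity.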

To do this, the network is trained with an adversary:

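- One part of the model, the domain classifier, tries to recognize which domain the data came from.

- Another part, the feature extractor, tries to fool this domain classifier by learning features that look the same no matter the source. In DANN this is done with a gradient reversal layer: it leaves the forward pass unchanged but flips the sign of the domain classifier's gradient (scaled by a weight λ) before it reaches the feature extractor.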
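Ganin and Lempitsky (2015) implement the "fooling" with a gradient reversal layer (GRL): the identity in the forward pass and multiplication of the gradient by -λ in the backward pass, placed between the feature extractor and the domain classifier. The sketch below makes that mechanism explicit with hand-written NumPy gradients for a deliberately tiny, hypothetical model: a linear feature extractor `W` and two logistic-regression heads (label head `v`, `c`; domain head `u`, `b`) trained with binary cross-entropy, with the domain loss averaged separately over source and target samples. All names, hyperparameters, and the toy data are illustrative assumptions, not the reference implementation of Ganin et al.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def grad_reverse(grad, lambd):
    """Backward pass of a gradient reversal layer (identity in the forward pass)."""
    return -lambd * grad


def dann_step(p, xs, ys, xt, lambd=0.1, lr=0.5):
    """One full-batch gradient step of a toy linear DANN (hypothetical setup).

    p: dict with feature extractor "W", label head "v", "c", domain head "u", "b".
    xs, ys: labeled source samples and 0/1 labels; xt: unlabeled target samples.
    """
    hs, ht = xs @ p["W"], xt @ p["W"]                      # shared features G_f(x)
    # Label head: logistic regression on SOURCE features only (no target labels).
    g_y = (sigmoid(hs @ p["v"] + p["c"]) - ys) / len(xs)   # dL_y/dlogit for BCE
    # Domain head: source = 0, target = 1; each domain's loss averaged separately.
    g_ds = sigmoid(hs @ p["u"] + p["b"]) / len(xs)          # (prob - 0) / n_s
    g_dt = (sigmoid(ht @ p["u"] + p["b"]) - 1.0) / len(xt)  # (prob - 1) / n_t
    grads = {
        "v": hs.T @ g_y, "c": g_y.sum(),
        # The domain head descends its own loss: it tries to tell domains apart.
        "u": hs.T @ g_ds + ht.T @ g_dt, "b": g_ds.sum() + g_dt.sum(),
    }
    # Feature extractor: task gradient plus the REVERSED domain gradient, i.e. it
    # descends L_y - lambd * L_d and so tries to make the domains indistinguishable.
    grads["W"] = (xs.T @ np.outer(g_y, p["v"])
                  + xs.T @ grad_reverse(np.outer(g_ds, p["u"]), lambd)
                  + xt.T @ grad_reverse(np.outer(g_dt, p["u"]), lambd))
    return {k: p[k] - lr * grads[k] for k in p}


# Toy usage: the label depends on feature 0; the "new scanner" shifts feature 1.
rng = np.random.default_rng(0)
xs = rng.normal(size=(200, 2))
ys = (xs[:, 0] > 0).astype(float)
xt = rng.normal(size=(200, 2)) + np.array([0.0, 3.0])
params = {"W": rng.normal(scale=0.5, size=(2, 2)), "v": np.zeros(2), "c": 0.0,
          "u": np.zeros(2), "b": 0.0}
for _ in range(200):
    params = dann_step(params, xs, ys, xt)
```

In an autograd framework the GRL is usually written as a custom function whose backward pass returns `-lambd * grad`, so the rest of the network trains with ordinary backpropagation. Ganin et al. (2016) also ramp λ up from 0 during training, which helps keep the adversarial game stable early on.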

As a result, the model ends up with domain-invariant features — representations that focus only on the medical problem (tumor vs. no tumor) and ignore irrelevant differences (scanner type, hospital, or patient demographics).
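One way to check how domain-invariant a set of features is, used by Ganin et al. (2016) to compare representations, is the proxy A-distance: train a classifier to separate source from target features and turn its held-out error ε into 2(1 - 2ε). Values near 0 mean the domains are hard to tell apart; values near 2 mean they are easily separable. The sketch below is an assumption-laden illustration: `proxy_a_distance` is a hypothetical helper, the classifier is a plain logistic regression trained by gradient descent (an SVM is another common choice), and a single 50/50 train/test split is used.

```python
import numpy as np


def proxy_a_distance(feat_src, feat_tgt, steps=500, lr=0.5, seed=0):
    """Proxy A-distance 2 * (1 - 2 * err) from a linear domain classifier.

    A logistic-regression classifier learns to separate source (0) from
    target (1) features; err is its error on a held-out half of the data.
    """
    x = np.vstack([feat_src, feat_tgt]).astype(np.float64)
    d = np.concatenate([np.zeros(len(feat_src)), np.ones(len(feat_tgt))])
    idx = np.random.default_rng(seed).permutation(len(x))
    train, test = idx[: len(x) // 2], idx[len(x) // 2:]
    # Standardize with training statistics so the step size suits any feature scale.
    mu, sd = x[train].mean(axis=0), x[train].std(axis=0) + 1e-8
    x = (x - mu) / sd
    w, b = np.zeros(x.shape[1]), 0.0
    for _ in range(steps):
        prob = 1.0 / (1.0 + np.exp(-(x[train] @ w + b)))
        g = (prob - d[train]) / len(train)  # BCE gradient w.r.t. the logits
        w -= lr * (x[train].T @ g)
        b -= lr * g.sum()
    err = np.mean(((x[test] @ w + b) > 0) != (d[test] == 1))
    return 2.0 * (1.0 - 2.0 * err)


# Usage on synthetic "features": identical vs. strongly shifted distributions.
rng = np.random.default_rng(42)
src = rng.normal(size=(400, 2))
pad_same = proxy_a_distance(src, rng.normal(size=(400, 2)))
pad_shift = proxy_a_distance(src, rng.normal(size=(400, 2)) + np.array([5.0, 0.0]))
```

Applied to features from a trained network, a lower value after adversarial training than before it suggests the features carry less scanner or hospital information, although it says nothing on its own about task accuracy.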

References:

- Ghafoorian M. et al. (2017). Transfer Learning for Domain Adaptation in MRI: Application in Brain Lesion Segmentation. MICCAI.

- Kermany D.S. et al. (2018). Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell.

- Ganin Y., Lempitsky V. (2015). Unsupervised Domain Adaptation by Backpropagation. ICML.

- Ganin Y. et al. (2016). Domain-Adversarial Training of Neural Networks. Journal of Machine Learning Research.

- Sheller M.J. et al. (2020). Federated learning in medicine: facilitating multi-institutional collaborations without sharing patient data. Scientific Reports.

- Kamnitsas K. et al. (2017). Unsupervised domain adaptation in brain lesion segmentation with adversarial networks. IPMI.